California's AI Chatbot Battle Moves to the Ballot Box

Key highlights this week:

We’re tracking 1,126 AI-related bills across all 50 states during the 2025 legislative session.

Legislative champions of AI safety, California’s Scott Wiener and New York’s Alex Bores, have both announced congressional campaigns.

A bipartisan bill in Congress would require age verification for companion chatbots.

Companion chatbots have also emerged as a major issue in California, where supporters of a measure to regulate the technology aim to place it on the 2026 ballot. That measure is the topic of this week’s deep dive.

If you thought we were done talking about California AI policy for a while after Gov. Newsom (D) finished signing a dozen AI bills into law, think again. As happened last year, the bills that didn’t earn the governor’s signature have drawn as much attention as those that did. This year, the governor had two AI chatbot bills on his desk aimed at protecting children, and he chose to enact the less restrictive one.

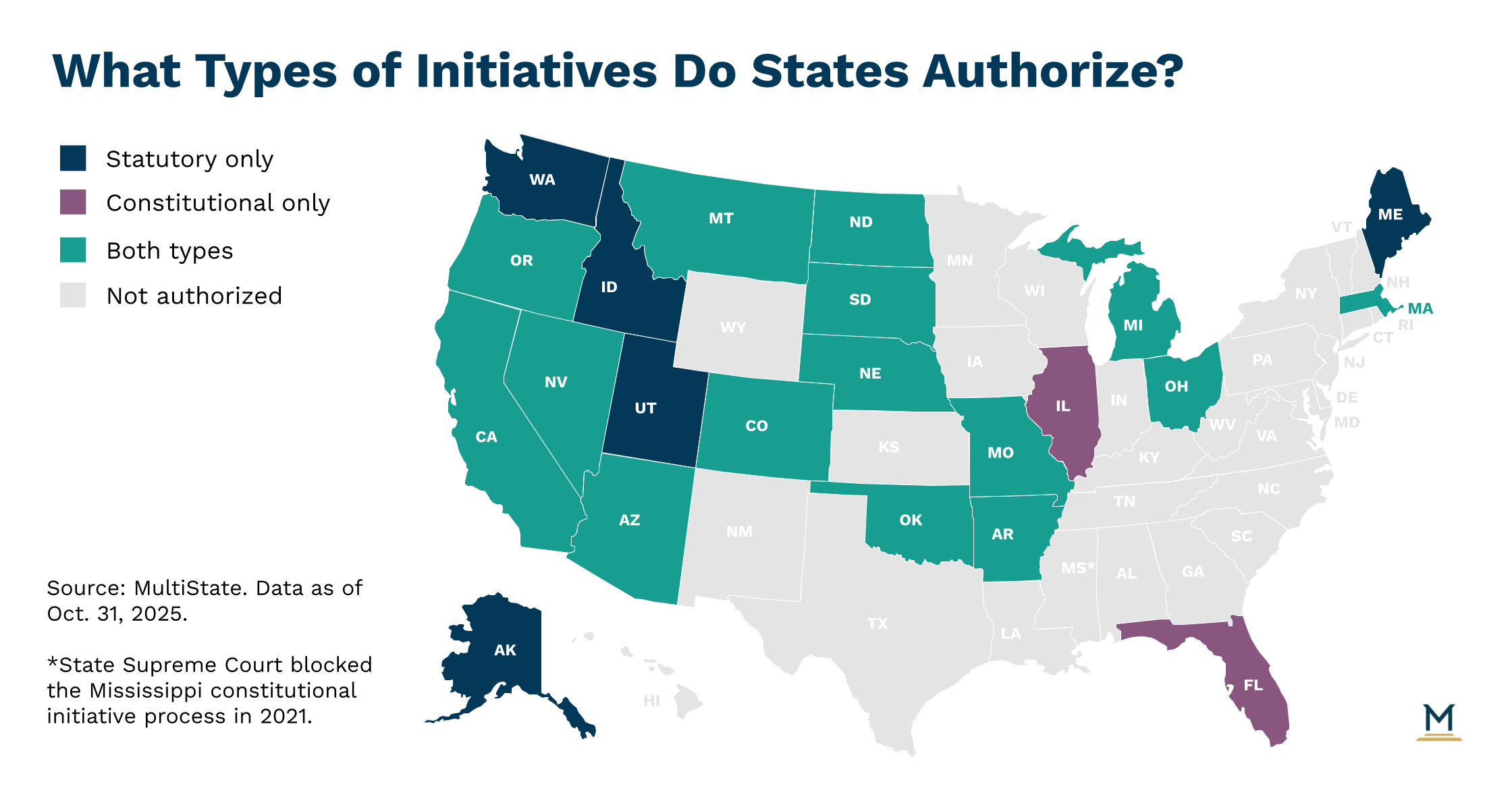

But in California, as in 19 other states, citizens can bypass the governor and the legislature altogether and enact laws directly through a ballot measure. Considering how popular AI regulation is with the general public, I’m surprised it took this long for an AI ballot measure to be announced.

A week after Gov. Newsom vetoed Assemblymember Rebecca Bauer-Kahan’s (D) Leading Ethical AI Development (LEAD) for Kids Act (CA AB 1064), supporters of the bill, led by James Steyer’s kids’ safety group Common Sense Media, filed an initiated state statute with the California Secretary of State for the 2026 ballot. The proposal lifts much of its language from the LEAD for Kids Act, which would have prohibited AI developers from producing a companion chatbot intended for use by or on a child unless the chatbot was not foreseeably capable of harming a child. But the proposed measure also goes beyond the original chatbot bill.

California Kids AI Safety Act

If certified for the ballot and approved by voters, the California Kids AI Safety Act would:

Prohibit operators from making companion chatbots available to children if the chatbots encourage self-harm, violence, illegal activity, or sexually explicit interactions; discourage children from seeking help from licensed professionals; or prioritize mirroring or validating the child user over the child user’s safety.

Require the Attorney General to establish regulations creating a risk classification system for AI products used by children, with four levels: “unacceptable risk” (products prohibited for children, such as those collecting biometric data or generating social scores), “high risk” (products requiring strict oversight, such as those affecting educational outcomes or targeting ads to children), “moderate risk,” and “low risk.”

Require developers of covered AI products intended for use by children to conduct independent safety audits, register products with the Attorney General, submit audit reports, and display system information labels indicating risk levels.

Prohibit selling or sharing child users’ personal information, or training AI models on their inputs, without consent.

Create a private right of action for children who suffer actual harm, including serious bodily injury, self-harm, or death, caused by violations of the Act or by social media platforms or AI products, with statutory damages of $5,000 per violation, up to $1 million per child, or three times actual damages, whichever is greater.

Require schools to prohibit student smartphone use during instructional time by July 1, 2027, and direct funding to support implementation.

Direct the California Department of Education to develop and update an AI literacy curriculum for grades K-12.
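To make the damages provision above concrete, here is a minimal Python sketch of one reading of it: statutory damages of $5,000 per violation, capped at $1 million per child, compared against three times actual damages, with the larger amount awarded. The function and constant names are hypothetical, and the sketch assumes the $1 million cap applies only to the statutory figure; the measure’s final text, or a court’s interpretation of it, could differ.

```python
# Hypothetical sketch of the damages formula as summarized in this article.
# Assumption: the $1 million per-child cap limits only the statutory amount,
# not the treble-damages alternative.
STATUTORY_PER_VIOLATION = 5_000
STATUTORY_CAP_PER_CHILD = 1_000_000


def recoverable_damages(violations: int, actual_damages: int) -> int:
    """Return the greater of capped statutory damages or treble actual damages."""
    statutory = min(violations * STATUTORY_PER_VIOLATION, STATUTORY_CAP_PER_CHILD)
    treble = 3 * actual_damages
    return max(statutory, treble)


# 10 violations: $50,000 statutory vs. $60,000 treble, so treble wins.
print(recoverable_damages(10, 20_000))    # 60000
# 300 violations: $1.5 million statutory is capped at $1 million, which beats $300,000 treble.
print(recoverable_damages(300, 100_000))  # 1000000
```

Under this reading, the cap only bites when violations are numerous, and the treble-damages alternative matters most when a child’s actual losses are large relative to the violation count.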

The proposed ballot measure would redefine “companion chatbot” as “a generative artificial intelligence system with a natural language interface that simulates a sustained humanlike relationship with a user” that asks “unprompted or unsolicited emotion-based questions that go beyond a direct response to a user prompt,” among other requirements and a list of exclusions. The proposal defines “child” as a California resident under 18 years of age.

The Governor’s Choice

In his veto message on the LEAD for Kids legislation, the governor argued that the bill was so broad that it could effectively ban companion chatbots for minors. Instead, Gov. Newsom signed a narrower AI chatbot bill (CA SB 243), sponsored by Sen. Steve Padilla (D), into law. That law requires companion chatbots to issue a clear and conspicuous notification that the chatbot is not human. Companion chatbot platforms will also be required to maintain protocols for addressing suicidal ideation, suicide, or self-harm content.

The law also requires measures to prevent sexual content and emphasizes transparency for minor users, including disclosure that the chatbot is AI and a notification every three hours discouraging prolonged use. In short, SB 243 requires disclosures and usage warnings, while the proposed ballot measure would prohibit certain chatbot behaviors outright and create liability for harm.

Going Straight to the Voters

Supporters of Assemblymember Bauer-Kahan’s more restrictive chatbot legislation are betting that voters will approve a more heavy-handed proposal over the governor’s objections. That’s probably a smart bet. While policymakers have struggled to balance AI’s promised benefits against its potential and emerging harms, public polling has been far more one-sided, and it strongly favors regulating AI. Polls show that support for regulation is not partisan, and there is little difference even across demographic groups.

The latest public opinion surveys I’ve seen on AI regulation asked specifically about AI chatbots. The Argument asked whether AI companies should be held liable for chatbot outputs, and respondents overwhelmingly sided against AI developers: between 72% and 79% agreed that AI companies should be held liable for various types of advice.

Children’s Online Safety Meets AI

This particular ballot measure pairs AI with another issue of rising importance to both the public and policymakers: children’s online safety. A growing number of states require age verification to access adult content or social media apps, and some have gone further with laws dictating how social media features may interact with children. These efforts face strong First Amendment challenges in court, but state lawmakers continue to enact stronger and more creative laws despite the legal pushback. Just as it was inevitable that AI policy would end up in a ballot measure, children’s online safety and AI chatbots were bound to meet as well. Still, AI measures aren’t immune to First Amendment challenges. Earlier this year, a federal judge blocked a California law aimed at restricting AI-generated deepfake content during elections for violating the First Amendment.

Path to the Ballot

While Common Sense Media has already filed its ballot measure proposal with the Secretary of State, that office will need to approve the ballot language before the campaign can begin collecting the 546,651 voter signatures required to qualify for the November 2026 ballot. Those signatures must be submitted by next June. The campaign plans to begin gathering signatures in December and submit them in the spring. Supporters have announced an initial $1 million for the campaign from “concerned citizens,” but considering the stakes, expect significantly more spending before voters head to the polls in 2026. Ballot measures also commonly face legal challenges. For context on spending, ballot measure campaigns spent nearly $400 million total in 2024, down from over $700 million in both 2020 and 2022.

Ballot Measure vs. Legislation

Two additional factors merit consideration. First, policymakers already struggle to draft statutory language for a technology that changes so rapidly, and policymaking via ballot measure widens the gap between when language is drafted to address the current technology and when it actually takes effect. In this case, language drafted in 2025 wouldn’t become law until 2027. Second, the legislative process allows stakeholders to provide feedback, negotiate, and shape the final law through amendments. With a ballot measure, by contrast, once the language is approved for signature gathering, that’s the language voters will decide on. Take it or leave it. One caveat to these concerns: this is an initiated state statute, not a constitutional amendment, so if voters pass it, lawmakers would have an opportunity to amend the statute after it takes effect.

If approved, the California Kids AI Safety Act could become a template for other states to follow — either through legislative adoption or by emboldening advocacy groups in other states with ballot measure processes to pursue similar campaigns. Chatbots have already drawn national scrutiny after lawsuits alleged that AI companions played a role in teen suicides, and congressional hearings this year put tech executives on the defensive over the psychological risks these systems pose to minors. With California once again out front on AI regulation, the measure would test how far public concern about children’s online safety and distrust of tech companies can translate into binding law.

Recent Developments

In the News

State lawmakers who passed AI safety bills are running for Congress: Two prominent state lawmakers who were lead sponsors of AI safety bills that cleared their legislatures this year have announced runs for Congress. California Sen. Scott Wiener (D) will run for Rep. Nancy Pelosi’s (D) congressional seat in San Francisco, and New York Assemblymember Alex Bores (D) will run for the open New York City seat of retiring U.S. Rep. Jerry Nadler (D). Both made names for themselves as AI policy leaders at the state level and would likely bring that expertise to the federal level if elected to the U.S. House in 2026.

Major Policy Action

Congress: Sens. Josh Hawley (R-MO) and Richard Blumenthal (D-CT) have introduced a bipartisan bill, the GUARD Act (S. 3062), that would require age verification for companion chatbots. According to a press release from Sen. Hawley, the bill would require AI companions to disclose their non-human status and lack of professional credentials to all users, and would criminalize knowingly making available to minors AI companions that solicit or produce sexual content.

Maine: Republican leaders on the Legislative Council accused Democrats of killing a bill that would criminalize AI-created child pornography. Democrats counter that the bill was not approved for consideration in the next session because a more substantive bill on the matter is already before the Judiciary Committee.

Washington: Gov. Bob Ferguson (D) made an appearance at Seattle AI Week this week, saying his job was “maximizing the benefits and minimizing harms” of AI. As Attorney General, he created an AI Task Force that will issue recommendations later this year.

Notable Proposals

Florida: Among the bills prefiled for the 2026 session is a measure (FL HB 281) that would prohibit the use of artificial intelligence in the practice of psychology, school psychology, clinical social work, marriage and family therapy, and mental health counseling, allowing it only for supplementary support services. Illinois passed a similar bill earlier this year (IL HB 1806).

Pennsylvania: House Democrats have introduced a bill (PA HB 1942) prohibiting “surveillance pricing,” defined as setting prices based on personally identifiable information collected through electronic surveillance technology. California (CA AB 325) became the first state to ban the practice, while New York (NY A 3008/S 3008) requires disclosure.

Wisconsin: A proposed bill in the Assembly (WI AB 575) would prohibit state agencies and local governmental units from using facial recognition technology or data generated from it. The bill makes an exception for identifying employees for employment-related purposes.