Inside Florida's “AI Bill of Rights”

Last month, Florida Gov. Ron DeSantis proposed a wide-ranging “Artificial Intelligence Bill of Rights” that would regulate chatbots, AI data usage, and unauthorized digital likenesses. While President Trump and congressional Republicans have pushed for federal preemption to limit state AI regulation, Gov. DeSantis has bucked his own party by embracing an aggressive state-level role in setting guardrails for artificial intelligence. This session, Florida lawmakers have introduced bills that incorporate many of DeSantis’ proposals. This approach demonstrates an urgency to address potential risks of AI rather than waiting for a uniform federal framework to emerge.

Lawmakers have introduced versions of Florida’s AI Bill of Rights in each chamber (FL HB 1395/SB 482), with the Senate version already receiving committee approval. While the bills enumerate a series of “rights” Floridians are said to possess when interacting with AI, the rights are only “in accordance with existing law” and are not to be “construed as creating new or independent rights or entitlements.”

Instead, the meat of the legislation lies in regulations targeting specific AI use cases, which fall into three categories: chatbots, consumer privacy protections, and digitally created content.

Chatbots

Last week, we wrote about how chatbot bills have already emerged as a trending issue this session, particularly legislation requiring that consumers be notified when they are interacting with a chatbot. The Florida bills require a disclosure to consumers at the beginning of any interaction between a user and a chatbot, followed by a pop-up message once every hour while the interaction continues.

We’ve seen pop-up notification requirements appear in other chatbot bills, and the approach was notably used by Colorado lawmakers in a law requiring social media platforms to display pop-up warnings to minor users at 30-minute intervals. A federal judge preliminarily enjoined that Colorado law, finding it likely violates the First Amendment.

Companion chatbots have drawn scrutiny in the state following the death of a Florida teen who died by suicide after engaging in roleplay with a customized chatbot. In response, the bills would require parental consent for anyone under 18 to become an account holder on a companion chatbot platform. Parents would also have to be given access to all of the minor’s interactions, tools to limit or disable interactions, information about how much time the minor spends on the platform, and timely notifications if the minor expresses an intent to harm themselves or others.

Companion chatbots would need to disclose to minor users that they are interacting with artificial intelligence, with a reminder once every hour to take a break. A platform that knowingly or recklessly violates these provisions would be subject to civil penalties and could face a civil action brought on behalf of the minor.

Notably, the proposed bills omit regulation of therapy chatbots, something Gov. DeSantis proposed back in December.

Consumer Privacy Protections

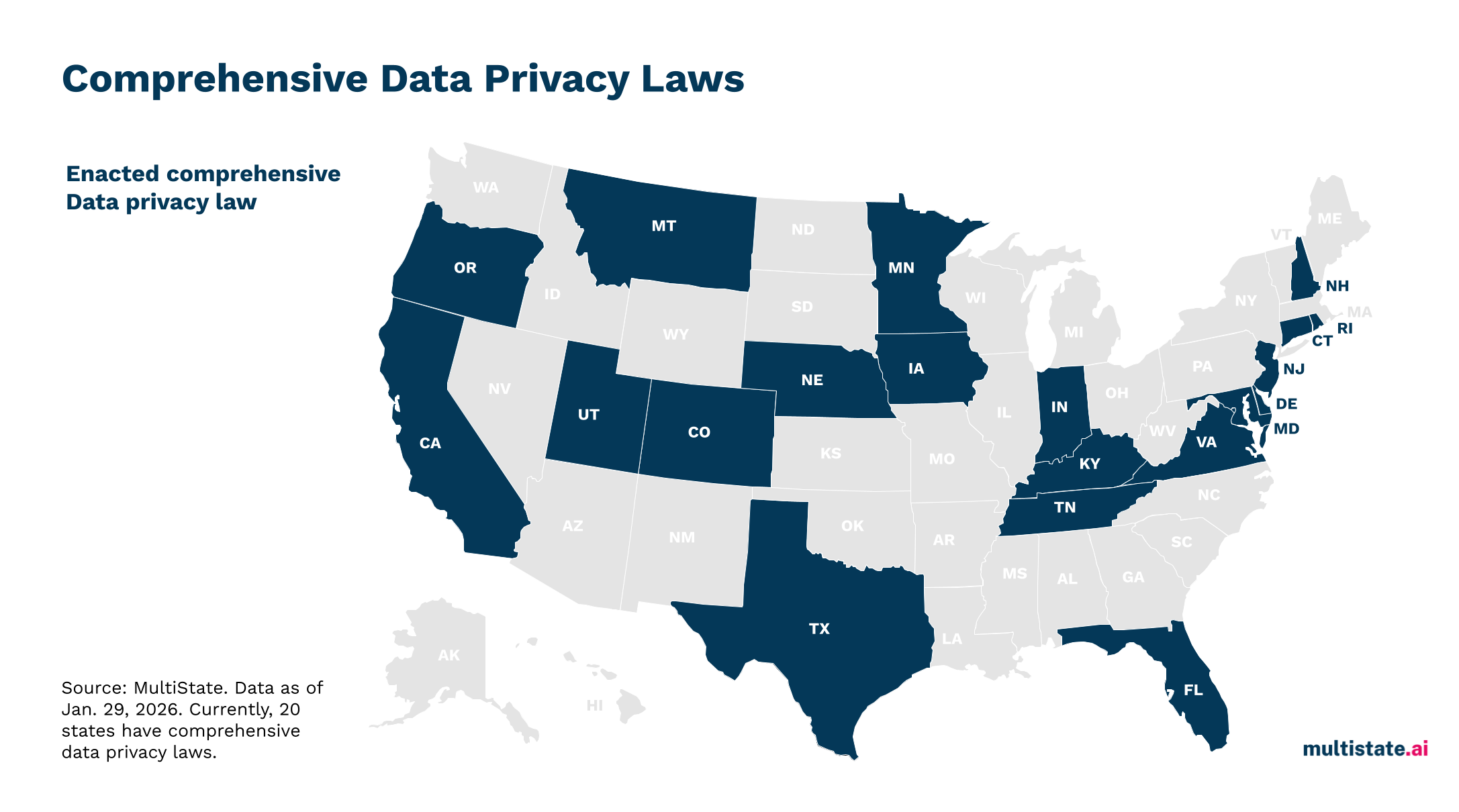

Florida is one of 20 states that currently have a law regulating consumer data privacy, but its scope is limited to certain large tech companies with over $1 billion in global gross annual revenues. These bills would apply to personal data held by any company that produces, develops, creates, designs, or manufactures artificial intelligence technology or products, collects data for use in such products, or implements artificial intelligence technology. The bills would prohibit these companies from selling or disclosing users’ personal information unless the data is de-identified. Unlike most other state privacy laws, the bills would give aggrieved individuals the right to sue under Florida’s deceptive and unfair trade practices law.

Digitally created content

Florida has already passed sexual and political deepfake laws, but still lacks a law protecting against the unauthorized use of an individual’s digital replica. These bills would extend name, image, and likeness protections to unauthorized commercial uses of likenesses created with generative AI. The provisions are similar to Tennessee’s landmark ELVIS Act, although they do not include a private right of action.

The bills would also bar the state from contracting for artificial intelligence technology owned by, controlled by, or based in a foreign country of concern. In addition to leaving out measures to prohibit therapy chatbots, the bills do not regulate insurance companies’ use of artificial intelligence, which Gov. DeSantis had called for. His December proposal also called for regulating the construction of data centers, but those proposals will be included in a separate bill.

What’s next

Sen. Tom Leek (R), who sponsored the Senate bill, said there is still more work to be done on AI, and that the measure is geared towards consumer protection, “not the universe of things that could be done.” If signed into law, it would take effect on July 1, 2026.

Florida’s approach points toward an emerging state-level model for artificial intelligence regulation. After unsuccessful attempts at passing a comprehensive regulatory framework, Connecticut lawmakers are expected to introduce a targeted AI bill that focuses on enumerating prohibited AI practices. Lawmakers in Oklahoma (OK SB 2085) and New York (NY A 3265) have introduced their own versions of an artificial intelligence bill of rights.

This shift reflects growing caution among state lawmakers after President Trump’s executive order explicitly singled out Colorado’s sweeping AI law, giving pause to states considering comprehensive frameworks. Even some Democratic governors and legislators have voiced concerns that economy-wide regulatory regimes may prove too onerous for the industry. Instead, states increasingly appear inclined to pursue narrower, use-case-specific strategies, suggesting that a piecemeal, risk-targeted model may become the dominant template for addressing AI risks.