State AI Chatbot Regulation Tackles Disclosure and Safety in 2026

Weekly Update, Vol. 85.

Key Takeaways

In 2026, state AI chatbot regulation is shifting from broad frameworks to targeted rules, with lawmakers focusing on specific use cases like child safety and professional services.

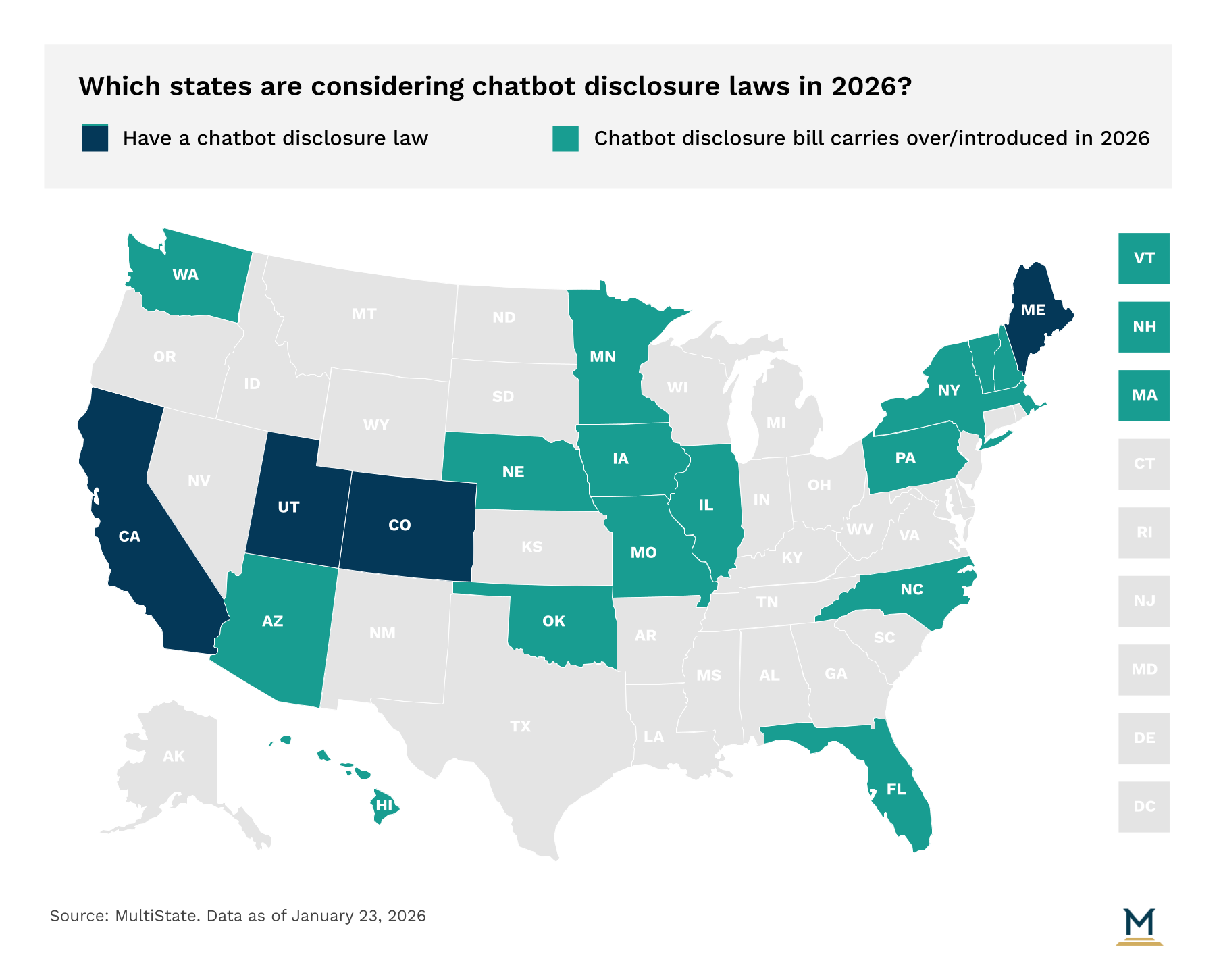

Chatbot disclosure requirements are expanding, with states such as Utah, California, Colorado, and Maine enacting or amending laws to ensure users know when they are interacting with AI rather than a human.

Companion chatbot laws are gaining traction, particularly in California and New York, where new regulations require clear notifications, protocols for self-harm prevention, and additional safeguards for minors.

Mental health chatbot regulation is evolving, with states like Illinois, Nevada, and Utah prohibiting AI from providing independent therapeutic services while allowing limited use under strict disclosure and privacy conditions.

Navigating state AI legislation in 2026 will require businesses to monitor a patchwork of evolving compliance obligations as lawmakers continue to refine chatbot regulations based on risk and context.

If you’re a subscriber, click here for the full edition of this update. Or, click here to learn more about our MultiState.ai+ subscription.

After several years of debating sweeping artificial intelligence bills, state lawmakers in 2026 are shifting toward narrower, use-case-driven regulation, with chatbots at the center of that transition. The shift is driven in large part by the growing intersection between AI policy and online child safety, as legislators focus on how conversational systems interact directly with minors. Alongside child-safety proposals, states are also advancing chatbot regulations that impose disclosure obligations and set guardrails around therapeutic or mental-health-related interactions, further expanding the compliance considerations for businesses.

Chatbots have rapidly become a common feature across consumer apps, customer service platforms, education tools, and wellness services, shifting from experimental deployments to routine points of user interaction in everyday digital life. As their use has grown, lawmakers have sought to add guardrails to protect consumers. Thus far, chatbot regulation has focused on three main areas: disclosure requirements, regulation of “companion” chatbots, and regulation of chatbots that provide professional services.
