An Analysis of 2025 State AI Laws & Federal Preemption

Remember that fun moratorium debate we had this summer? It’s back with a vengeance.

Congressional Republicans, in concert with President Trump, are once again pushing federal preemption of state artificial intelligence laws. U.S. House leaders plan to insert the preemption language into the must-pass annual defense policy bill. Ramping up the pressure on states even further, President Trump ranted against “Woke AI” and a “patchwork of state regulatory regimes” on social media and plans to sign an executive order as soon as today to “enhance America’s global AI dominance through a minimally burdensome, uniform national policy framework for AI.” It’s unclear what this all means for the states at this point. But one thing we can do is to take a step back and examine exactly what the federal government is looking to block states from implementing.

This week, MultiState.ai has released a special report that examines all 136 of the state laws enacted this year that are related to AI and asks: which of these new laws places a mandate on private sector developers and deployers of AI?


2025 Enacted AI Laws: Analysis of Developer & Deployer Mandates

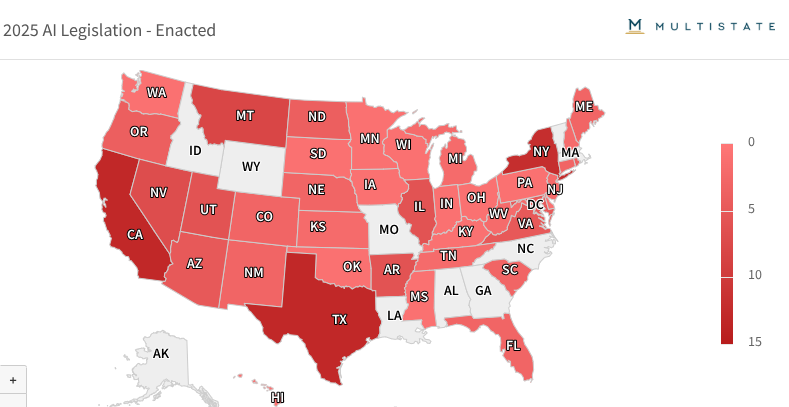

As readers of this newsletter know, this year saw a huge jump in interest in artificial intelligence policy at the state level. Last year, we saw 99 bills enacted into law at the state level after lawmakers introduced 635 bills. This year, MultiState has tracked 136 AI-related bills that states have enacted into law so far during the 2025 legislative sessions.

However, we define “AI-related” broadly (as explained fully in the methodology section of our website) including proposals that relate to AI study committees, regulatory sandboxes, autonomous vehicles, and facial recognition systems. For regulated entities and their government affairs teams, the most consequential provisions of these laws are those that create specific compliance obligations — or mandates — on private sector actors. For this reason, this analysis of 2025 enacted laws will focus on the new AI-related laws that place a mandate on the developer or deployer of an AI system. We’ve written about these terms extensively, but, essentially, a “developer” is an organization building AI systems and a “deployer” is someone using an AI system as a part of their business.

A significant majority (110 of 136, or about 81%) of enacted bills this year did not place a mandate on private-sector developers or deployers of AI systems. Instead, most new laws regulate public sector use of AI, regulate the creation and distribution of deepfakes, or do not contain a mandate at all (e.g., a budget item to fund school AI education programs).
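For readers who want to check the split themselves, here is a minimal sketch of the arithmetic. It uses only the two counts reported in this analysis (136 enacted laws, 110 without a private-sector mandate); the variable and function names are illustrative and are not taken from the report.

```python
# Counts as reported in this analysis; names are illustrative assumptions.
TOTAL_ENACTED = 136        # AI-related laws enacted in 2025 so far
NO_PRIVATE_MANDATE = 110   # laws with no developer/deployer mandate


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Laws that do place a mandate on private-sector developers or deployers.
with_mandate = TOTAL_ENACTED - NO_PRIVATE_MANDATE

print(f"No private-sector mandate: {share(NO_PRIVATE_MANDATE, TOTAL_ENACTED)}%")
print(f"Developer/deployer mandate: {with_mandate} laws, "
      f"{share(with_mandate, TOTAL_ENACTED)}%")
```

Under these counts, roughly four in five enacted laws carry no private-sector mandate, and the remaining 26 make up about a fifth of the total.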

That left us with 26 new laws that we classified as containing some sort of mandate on developers or deployers. We can then sort those laws into “broad” or “narrow” buckets. The highest-profile new law is California’s AI safety law (CA SB 53), which Sen. Wiener (D) was able to get across the finish line this year. This law, which we’ve written about extensively, imposes broad reporting requirements on “large” and “frontier” AI model developers. In the bucket of “narrow” mandates on developers, the laws we identified fit neatly into two particular categories of concern for lawmakers: chatbots representing themselves as healthcare professionals and companion chatbots.

Deployers of AI systems saw the most mandates in our analysis (16 of 26), but even more so than developers, the mandates on deployers were mostly narrowly focused, which we sorted into a handful of specific categories: healthcare (5 bills), chatbots (3), sexual deepfakes (2), algorithmic pricing (2), schools (1), public utilities (1), and food delivery (1).

I encourage you to read through the full report and scan the specific bills that have been enacted. Of course, the year is not yet through, and we might still see a few more significant AI bills enacted by the few states still technically in session. Plus, Assemblymember Alex Bores’ RAISE Act (NY SB 6953), a significant AI safety bill on par with CA SB 53, is awaiting the governor’s signature in New York. And finally, to be clear, these are not all the AI-related laws on the books in the states, only the laws enacted this year.

Understanding what's actually on the books makes the stakes of the preemption debate clearer. With most state AI laws narrowly focused on specific harms, and only 26 placing any mandate on private-sector developers or deployers, what are federal officials looking to preempt, and why?

Preemption Talk is Back: What Are They Saying?

We wrote about the previous attempt by Congress to enact a ten-year moratorium on state AI regulation as a provision in this year’s reconciliation bill. But congressional Democrats balked, and even some Republicans warned that the provision would violate states’ rights. Sen. Marsha Blackburn (R-TN), who is running for governor of Tennessee, pulled her support of a potential compromise five-year moratorium over concerns it would invalidate her state’s landmark digital replica law (Tennessee’s ELVIS Act).

Unsurprisingly, the pushback was even stronger at the state level. A joint letter opposing the moratorium and calling it an “overreach” that "would freeze policy innovation" was signed by 260 state lawmakers from all 50 states. Seventeen Republican governors signed a joint letter calling for the moratorium to be stripped from the budget reconciliation bill, touting legislative efforts to enact sensible AI regulation in Republican-led states, such as the AI law passed in Utah and deepfake and digital replica laws enacted in Arkansas. A bipartisan coalition of state attorneys general sent a letter this summer to congressional leaders opposing the proposed ten-year moratorium, arguing it would “be sweeping and wholly destructive of reasonable state efforts to prevent known harms associated with AI.”

That resistance is already resurfacing as Congress returns with a similar proposal. Florida Gov. Ron DeSantis (R), who criticized the moratorium over the summer, has come out vociferously against it this week. On social media, he called a moratorium a “subsidy to Big Tech” and said that inserting it into the defense bill is an “insult to voters.” Govs. Spencer Cox (R) of Utah and Sarah Huckabee Sanders (R) of Arkansas also criticized the moratorium proposal.

State leaders argue the moratorium would halt years of work developing guardrails for high-risk AI systems and automated decision tools, leaving a regulatory vacuum at a time when states are confronting algorithmic hiring tools, harmful deepfake content, and consumer profiling technologies. They also point out that Congress is proposing to freeze state authority while offering no federal framework in its place, a dynamic they see as both unworkable and constitutionally suspect.

On the other side, Gov. Jared Polis (D) of Colorado generally supported the moratorium, to "give Congress a chance . . . to create a true 50-state solution to smart AI protections." Gov. Polis’ statement was notable since he put his signature on a first-in-the-nation broad AI regulation (CO SB 205) last year, which has yet to go into effect, and has gone through a series of setbacks and delays. We expect that law to get scaled back during next year’s legislative session before it has a chance to go into effect as currently drafted.

Republicans argue that by allowing state-level regulation, Democrats in California and Colorado can dictate a de facto national standard. The draft executive order that’s been circulated this week calls the new California law (CA SB 53) a “complex and burdensome disclosure and reporting law premised on the purely speculative suspicion that AI might ‘pose significant catastrophic risk.’” It declares the need for AI companies in the United States to innovate without the threat of state regulation, calling for a “minimally burdensome, uniform national policy framework for AI.”

The EO draft borrows some ideas from the original moratorium in the reconciliation bill, such as conditioning funding for broadband programs and other discretionary grant programs on whether a state has enacted an AI law that contradicts the order. It would direct the U.S. Attorney General to create an AI Litigation Task Force to challenge state statutes viewed as unlawfully regulating interstate commerce. The FCC would be directed to consider whether to establish a federal AI reporting and disclosure standard that would preempt conflicting state rules, while the FTC would be directed to issue a policy statement on how its unfair and deceptive practices authority applies to AI models. Finally, the order directs the development of recommendations for a federal AI framework that explicitly preempts state law.

What’s Next?

Importantly, as of this writing, we don’t have legislative language to analyze, only a draft executive order that could change or not be issued at all. We expect the preemption language that could be inserted in the defense bill will be narrower than the language included in the original reconciliation bill in order to win over some red state support and zero in on blue state laws like CA SB 53.

And even if we had clear language and a crystal ball to tell us whether the bill would pass, we still wouldn’t know which of the state laws would be challenged and potentially invalidated by federal preemption. Some laws might be clear-cut, but most will be borderline, and it’ll be up to the courts to decide which state laws will stand.

State lawmakers themselves are unlikely to willingly cede their responsibilities to regulate AI if Congress fails to enact its own regulatory framework. We expect state lawmakers will find clever ways to enact new AI regulations despite federal preemption. And even before we get there, if some form of preemption is enacted, both the preemption language and any executive order will surely be challenged in court as unconstitutional.

So we’re either at the beginning of a long fight over federalism or this entire debate will die down once again and we’ll move on to speculating on state AI proposals for 2026. For government affairs teams, the uncertainty argues for scenario planning on both fronts: prepare for compliance with state laws while monitoring federal developments. We’ll continue to keep you informed.